When it comes to the fine art of Search Engine Optimization (SEO), the team at Ninthlink stays on top of new trends, changes in the digital realm, and the ways people use search engines today. Understanding how people find the things they want to learn about, and the things they want to buy, is the key to meta tagging and to making SEO strengthen a business's online presence.

Here Come the Bots

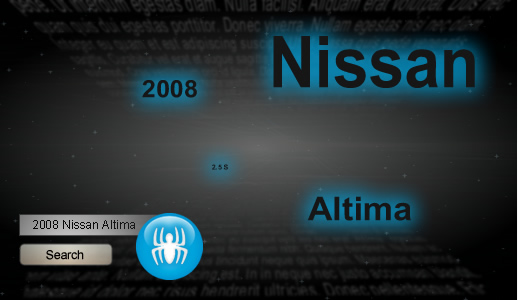

All search engines use robots (also called spiders or “bots”), programs that travel through the web gathering information in a variety of ways, mainly from website content and from words that have been meta-tagged. Experts say the key to grabbing the bots’ attention is specific detail in content wording. Take Nissan as an example: a shopper does not simply type “Nissan” but types a specific model, a color, and the features of the car they want to learn about or buy. Each of these details must be optimized so that search engines return the right pages and the potential customer knows exactly where to find (and buy) the item they want.

Habits Change

People’s web search habits change over time. They may start out seeking basic information on a product, business, or idea; several months later, they may search for the same item again but add different terms and details to the query. At first the person searching is merely curious; later, now well informed, the same person is ready to become a customer.

Here is how Wikipedia describes the process: “Webmasters and content providers began optimizing sites for search engines in the mid-1990s, as the first search engines were cataloging the early Web. Initially, all a webmaster needed to do was submit a page, or URL, to the various engines which would send a spider to ‘crawl’ that page, extract links to other pages from it, and return information found on the page to be indexed. The process involves a search engine spider downloading a page and storing it on the search engine’s own server, where a second program, known as an indexer, extracts various information about the page, such as the words it contains and where these are located, as well as any weight for specific words and all links the page contains, which are then placed into a scheduler for crawling at a later date.”
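The crawl-and-index loop in that quotation can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not how any real search engine works: the `crawl` function, the `PageParser` class, and the in-memory `pages` dict (which stands in for downloading pages over the network) are all hypothetical names made up for this example. The spider "downloads" a page, the indexer records each word and its position, and newly found links go into a scheduler queue for a later crawl.

```python
from collections import deque
from html.parser import HTMLParser
import re


class PageParser(HTMLParser):
    """Hypothetical helper: collects the words and <a href> links of one page."""

    def __init__(self):
        super().__init__()
        self.words = []
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)

    def handle_data(self, data):
        # Lowercase each word so "Nissan" and "nissan" share one index entry.
        self.words.extend(w.lower() for w in re.findall(r"[A-Za-z0-9]+", data))


def crawl(start_url, fake_web):
    """Crawl from start_url over fake_web, a hypothetical dict of URL -> HTML
    that stands in for downloading pages."""
    index = {}                      # word -> list of (url, position) pairs
    scheduler = deque([start_url])  # links waiting to be crawled later
    seen = {start_url}
    while scheduler:
        url = scheduler.popleft()
        html = fake_web.get(url)    # "download" the page
        if html is None:            # link points outside our toy web
            continue
        parser = PageParser()
        parser.feed(html)
        parser.close()
        # Indexer step: record each word and where it appears on the page.
        for position, word in enumerate(parser.words):
            index.setdefault(word, []).append((url, position))
        # Scheduler step: queue newly discovered links for a later crawl.
        for link in parser.links:
            if link not in seen:
                seen.add(link)
                scheduler.append(link)
    return index


pages = {
    "/": '<p>Nissan dealers</p><a href="/altima">Altima</a>',
    "/altima": "<p>Blue Nissan Altima with sunroof</p>",
}
idx = crawl("/", pages)
print(idx["nissan"])  # [('/', 0), ('/altima', 1)]
```

Looking up "nissan" in the resulting index points to both pages, and the position numbers show where the word sits on each one, which is the kind of raw data an indexer can use when weighing specific words.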

When creating SEO data for Ninthlink clients, Ron Weber says he must consider the client’s goals, how much web traffic the client currently gets, and how much traffic the client wants to gain. According to Weber, these are the parameters Ninthlink uses when optimizing a client’s site: “What is the market, who are the customers out there, and what type of criteria are these customers using to find what they need?”

End of Summer Reading

Some informative books on SEO are:
